Wednesday, Aug 3, 2016

SEO for JavaScript applications, 2016 edition

For a long time, SEO recommendations have centered on having a plain-text version of your content whenever possible and avoiding dynamically generated content, Ajax, and JavaScript. This should no longer be the case for the major search engines, especially Google. Or should it?

Traditionally, search engine crawlers looked only at the raw textual content in the HTTP response body and didn’t interpret the page the way a typical browser running JavaScript would. When pages with a lot of JavaScript-rendered content started showing up, Google began crawling and indexing them as early as 2008, but in a rather limited fashion. The Ajax crawling scheme has been the standard, albeit clunky, solution to this problem until now. Google put a lot of work into a more elegant approach to understanding web pages, and finally, on Oct 14, 2015, they officially announced that the AJAX crawling scheme is now deprecated. In their own words:

We are no longer recommending the AJAX crawling proposal we made back in 2009.

No more unpredictable, minimum-scale crawling attempts. It’s official: they should now support the whole thing. After all, [some of the detailed and comprehensive tests done almost a year ago](http://searchengineland.com/tested-googlebot-crawls-javascript-heres-learned-220157) showed that Google can now handle JavaScript as never before, including redirects, links, dynamically inserted content, metadata, and page elements. So [can we trust Google](http://searchengineland.com/can-now-trust-google-crawl-ajax-sites-235267) to completely crawl Ajax-based sites? Unfortunately, the answer is no, at least not yet.

In our experience, while most JavaScript-based functionality is now understood by Googlebot, it consistently fails to crawl content retrieved through the XMLHttpRequest API from an external source, or anything built on top of or related to this API. This behavior is present in pure JavaScript, jQuery, AngularJS, and other modern JavaScript frameworks. Whenever you need to pull content from an “external” URL or call a REST API endpoint to fetch some data, chances are that it will not be crawled and indexed properly. This has huge implications for applications working with any sort of Backend-as-a-Service (BaaS) or similar infrastructure. Server-side rendering would resolve this, and while it is supported in some JavaScript frameworks (like React), we need a universal solution until it is widely available.

Here is an example. Take a look at one of the simplest Baasic blog starter kits. It is built using AngularJS and pulls a list of blog posts from our API, rendering it within a simple layout. Everything seems to be in order, doesn’t it?

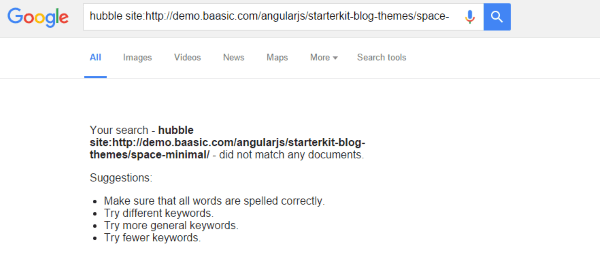

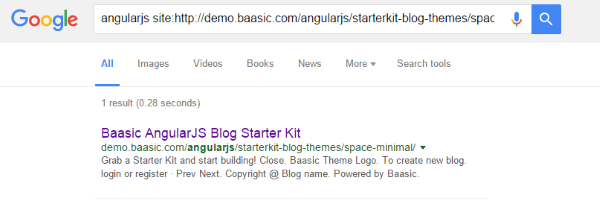

However, this is what happens when we try to find a word from an individual post in the Google search results:

The static content of the page itself is indexed, but the dynamically generated content is missing. Here is the proof:

So, while Google is generally able to render and understand your web pages like modern browsers do, it does not currently cover all possible scenarios. This is obviously not acceptable for production environments, so let’s take a step back and revisit the existing standard.

Ajax crawling scheme

Google and other advanced search engines support the hashbang (#!) URL format, which was the original method of creating permalinks for JS applications. Whenever a crawler sees a URL containing the hashbang:

http://www.site.com/#!/some/page

it will transform the URL into

http://www.site.com/?_escaped_fragment_=/some/page

letting you know that the request should be handled differently from a regular browser visit. On most occasions, you will serve a prerendered HTML snapshot to the crawler, saving it the effort of parsing and executing JavaScript on its own.
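The URL rewrite described above can be sketched in a few lines of TypeScript. This is only an illustration of the mapping, not Googlebot's actual code: the function names `toCrawlerUrl` and `fromCrawlerUrl` are made up for this post, and the set of escaped characters is our reading of the scheme (control characters, space, `#`, `%`, `&` and `+`). Non-ASCII characters are left alone to keep the sketch short.

```typescript
const MARKER = "_escaped_fragment_=";

// Percent-encode the characters we assume the scheme escapes in the fragment.
function escapeFragment(fragment: string): string {
  return fragment.replace(/[\x00-\x20#%&+\x7f]/g, (ch) => encodeURIComponent(ch));
}

// What a crawler does: turn a hashbang URL into the "ugly" URL it requests.
function toCrawlerUrl(url: string): string {
  const index = url.indexOf("#!");
  if (index === -1) {
    return url; // no hashbang, nothing to rewrite
  }
  const base = url.slice(0, index);
  const fragment = url.slice(index + 2);
  // Append to an existing query string if there is one.
  const separator = base.indexOf("?") === -1 ? "?" : "&";
  return base + separator + MARKER + escapeFragment(fragment);
}

// What your server can do: recover the hashbang URL that should be rendered.
function fromCrawlerUrl(url: string): string {
  const index = url.indexOf(MARKER);
  if (index < 1) {
    return url; // not a crawler-style URL
  }
  const base = url.slice(0, index - 1); // drop the "?" or "&" before the marker
  const fragment = decodeURIComponent(url.slice(index + MARKER.length));
  return base + "#!" + fragment;
}

// toCrawlerUrl("http://www.site.com/#!/some/page")
//   → "http://www.site.com/?_escaped_fragment_=/some/page"
// fromCrawlerUrl("http://www.site.com/?_escaped_fragment_=/some/page")
//   → "http://www.site.com/#!/some/page"
```

The reverse function is the useful one on the server side: it tells you which application state the crawler is asking for, so you know which snapshot to serve.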

The newer HTML5 pushState doesn’t work the same way: it modifies the browser’s URL and history without a hashbang, so nothing in the URL signals the crawler. If you are using pushState, you should add the following tag to the head of your pages:

<meta name="fragment" content="!">

This tells Googlebot to revisit the page with ?_escaped_fragment_= appended to the URL, for example http://www.site.com/some/page?_escaped_fragment_=

Don’t be confused if you have read that Google doesn’t support the Ajax crawling scheme anymore. They are not recommending it, but it is still supported, and it will stay that way for the foreseeable future.

Using one of the existing commercial services is the easiest way to start using this approach in your JavaScript applications. Here are just a few:

We have opted to run a dedicated prerendering service on our own servers, using Prerender.io.

Prerender.io

The general idea is to have the Prerender.io middleware installed on the servers rendering your applications. Middleware is just a fancy name for a package or a set of URL rewriting rules that check each request to see if it’s coming from a crawler. If it is a request from a crawler, the middleware will send a request to the prerendering service for the static HTML of that page.
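To make the decision logic concrete, here is a minimal sketch of the check such a middleware performs. It is not the actual Prerender.io middleware: the crawler list, the static-file extensions, and the function names are assumptions picked for illustration. The service address `http://localhost:3000` is likewise a placeholder for wherever your prerendering service runs, and appending the full page URL to it is how we assume the service is addressed.

```typescript
// Assumed, deliberately short lists; a real setup would be more thorough.
const CRAWLER_PATTERNS: string[] = ["googlebot", "bingbot", "yandex", "baiduspider"];
const STATIC_EXTENSIONS: string[] = [".js", ".css", ".png", ".jpg", ".gif", ".ico"];

// Return the URL without its query string.
function stripQuery(url: string): string {
  const q = url.indexOf("?");
  return q === -1 ? url : url.slice(0, q);
}

// Static assets never need a prerendered snapshot.
function isStaticAsset(url: string): boolean {
  const path = stripQuery(url).toLowerCase();
  return STATIC_EXTENSIONS.some((ext) => path.slice(-ext.length) === ext);
}

// A request counts as a crawler request if it uses the Ajax crawling
// scheme or if its User-Agent matches one of the known patterns.
function isCrawlerRequest(userAgent: string, url: string): boolean {
  if (url.indexOf("_escaped_fragment_") !== -1) {
    return true;
  }
  const ua = userAgent.toLowerCase();
  return CRAWLER_PATTERNS.some((pattern) => ua.indexOf(pattern) !== -1);
}

function shouldPrerender(userAgent: string, url: string): boolean {
  return !isStaticAsset(url) && isCrawlerRequest(userAgent, url);
}

// Build the address to request from the prerendering service
// (assumed format: service base followed by the full page URL).
function prerenderUrl(serviceBase: string, pageUrl: string): string {
  return serviceBase.replace(/\/+$/, "") + "/" + pageUrl;
}
```

When `shouldPrerender` returns true, the middleware fetches `prerenderUrl(...)` and returns that HTML to the crawler; every other request goes to the application as usual.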

If you are serving your applications from ASP.NET, the middleware can be a simple HTTP module. However, we usually opt for the approach that uses URL rewriting rules. It can be used with Apache, Nginx, or any other server - the actual packages and instructions can be downloaded from here.

Now we need to install the Prerender.io service on our servers. Its source code is freely available, but the installation documentation is a bit terse. We will guide you through the process in the next post. In the meantime, please share your experiences with SEO strategies for JavaScript applications.